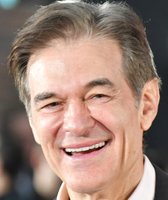

Students work to "Find the Bot" at Stonewall Elementary in Lexington, Ky., Feb. 6, 2023. Students each summarized text about boxer Muhammad Ali then tried to figure out which summaries were penned by classmates and which was written by a chatbot. (AP)

If Your Time Is Short

- Experts on adolescent psychiatry and psychology say it’s important to have open and continuous discussion with kids about their use of artificial intelligence and AI chatbots.
- Parents should set boundaries similar to those they might have for smartphones and internet use. Modeling safe behavior helps.
- If a child is routinely relying on AI chatbots while withdrawing from peers, family and outside activities, that’s cause for concern and might be a sign to involve a mental health professional.

Editor’s Note: This story contains discussion of suicide. If you or someone you know is struggling with suicidal thoughts, call the National Suicide Prevention Lifeline at 988 (or 800-273-8255) to connect with a trained counselor.

Artificial intelligence loomed large in 2025. As AI chatbots grew in popularity, news reports documented some parents’ worst nightmares: children dead by suicide following secret conversations with AI chatbots.

It’s hard for parents to track rapidly evolving technology.

Last school year, 86% of students reported using artificial intelligence for school or personal use, according to a Center for Democracy & Technology report. A 2025 survey found that 52% of teens said they used AI companions — AI chatbots designed to act as digital friends or characters — a few times a month or more.

How can parents navigate the ever-changing AI chatbot landscape? Research on its effects on kids is in early stages.

PolitiFact consulted six experts on adolescent psychiatry and psychology for parental advice. Here are their tips.

Want to know if and how your kids use AI chatbots? Ask.

Parents should think of AI tools in the same vein as smartphones, tablets and the internet. Some use is okay, but users need boundaries, said Şerife Tekin, a philosophy and bioethics professor at SUNY Upstate Medical University.

The best way to know if your child is using AI chatbots "is simply to ask, directly and without judgment," said Akanksha Dadlani, a Stanford University child and adolescent psychiatry fellow.

Parents should be clear about their safety concerns. If they expect to periodically monitor their children’s activities as a condition of access to the technology, they should be up-front about that.

When families talk regularly and parents ask kids about their AI use, it’s "easier to catch problems early and keep AI use contained," said Grace Berman, a New York City psychotherapist. But perhaps the most important tool is open conversation.

Make curiosity, not judgment, the focal point of the conversation.

Being inquisitive rather than confrontational can help children feel safer sharing their experiences.

"Ask how they are using it, what they like about it, what it helps with, and what feels uncomfortable or confusing," Dadlani said. "Keep the tone non-judgmental and grounded in safety."

Listen with genuine interest in what they have to say.

Ask your child what they believe their preferred AI chatbot knows about them. Ask if a chatbot has ever told them something false or made them feel uncomfortable.

English teacher Casey Cuny, center, helps a student input a prompt into ChatGPT on a Chromebook during class at Valencia High School in Santa Clarita, Calif., Aug. 27, 2025. (AP)

Parents can also ask their children to help them understand the technology, letting them guide the conversation, psychologist Don Grant told the Monitor on Psychology, the American Psychological Association’s official magazine.

"One key message to convey: Feeling understood by a system doesn’t mean it understands you," Tekin said. "Children are capable of grasping this distinction when it’s explained respectfully."

Parents might bring up concerns about AI chatbots’ privacy and confidentiality or the fact that an AI chatbot’s main goal is to affirm them and keep them using the bot. Emphasize that AI is a tool, not a relationship.

"Explain that chatbots are prediction machines, not real friends or therapists, and they sometimes get things dangerously wrong," Berman said. "Frame this as a team effort, something you want your child to be able to make healthy and informed decisions about."

Use the technology’s safety settings, but remember they’re imperfect.

Parents can restrict children to using technology in their home’s common areas. Apps and parental controls are also available to help parents limit and monitor their children’s AI chatbot use.

Berman encourages parents to use apps and parental controls such as Apple Screen Time or Google Family Link to monitor technology use, app downloads and search terms.

Parents should use screen and app-specific time limits, automatic lock times, content filters and, when available, teen accounts, Dadlani said.

"Monitoring tools can also be appropriate," Dadlani said.

With Bark Phones or the Bark or Aura apps, parents can set restrictions for certain apps or websites and monitor and limit online activities.

Parents can adjust AI chatbot settings or instruct children to avoid certain bots altogether.

In some of the AI chatbot cases that resulted in lawsuits, the users were interacting with chatbot versions that had the ability to remember past conversations. Tekin said parents should disable that "memory," personalization or long-term conversation storage.

"Avoid platforms that explicitly market themselves as companions or therapists," she said.

Bruce Perry, 17, shows his ChatGPT history at a coffee shop in Russellville, Ark., July 15, 2025. (AP)

Some chatbots have or are creating parental controls, but that approach is also imperfect.

"Even the ones that do will only provide parental controls if the parent is logged in, the child is logged in, and the accounts have been connected," said Mitch Prinstein, the American Psychological Association’s chief of psychology.

These measures don’t guarantee that kids will use chatbots safely, Berman said.

"There is much we don’t yet know about how interacting with chatbots impacts the developing brain — say, on the development of social and romantic relationships — so there is no recommended safe amount of use for children," Berman said.

Does that mean it’s best to impose an outright ban? Probably not.

Parents can try, but they are unlikely to succeed in entirely preventing kids, especially older children and teens, from using AI chatbots. And trying might backfire.

"AI is increasingly embedded in schoolwork, search engines, and everyday tools," Dadlani said. "Rather than attempting total prevention, parents should focus on supervision, transparency and boundaries."

Students gather in a common area as they head to classes in Oregon, May 4, 2017. (AP)

Model the behavior you want kids to emulate.

Restrictions aren’t the only way to influence your kids’ interactions with AI chatbots.

"Model healthy AI use yourself," Dadlani said. "Children notice how adults use technology, not just the rules they set."

Prinstein said parents should also model their attitudes toward AI by openly discussing AI with kids in critical and thoughtful ways.

"Engage in harm reduction conversations," Berman said. That might look like asking your child questions such as, "How could you tell if you were using AI too much? How can we work together as a team to help you use this responsibly?"

From there, you can collaboratively set expectations for AI use with your kids.

"Work together to co-create a plan on when and how the family will use AI companions and when to turn to real people for help and guidance," said Naomi Aguiar, associate director of Oregon State University’s Ecampus Research Unit. "Put that plan in writing and do weekly check-ins."

If you have concerns specific to your child’s use, don’t be afraid to ask your child to tell you what the chatbot is saying or ask to see the messages.

Parents should emphasize they won’t be upset or angry about what they find, Prinstein said. It might be useful to remind your child that you’re coming from a place of concern by saying something like, chatbots are "known to make things up or to misunderstand things, and I just want to help you to get the right information," he said.

Replacing in-person relationships with AI interactions is cause for concern.

Parents should look for signs that an AI chatbot is affecting a child’s mood or behavior.

Some red flags that a child is engaged in unhealthy or excessive AI chatbot use:

- Withdrawal from social relationships and increased social isolation.
- Increased secrecy or time alone with devices.
- Emotional distress when access to AI is limited.
- Disinterest in activities your child used to enjoy.
- Sudden changes in grades.
- Increased irritability or aggression.
- Changes in eating or sleeping habits.
- Treating a chatbot like a therapist or best friend.

Parents shouldn’t necessarily assume all irritability or privacy-seeking behavior is a sign of AI chatbot overuse. Sometimes, that’s part of being a teenager.

But parents should be on the lookout for patterns that seem in sync with kids’ chatbot engagement, Prinstein said.

"The concern is not curiosity or experimentation," Dadlani said. "The concern is the replacement of human connection and skill-building."

Take note if the child is routinely relying on chatbots — particularly choosing bots’ advice over human feedback — while withdrawing from peers, family and outside activities.

"That is when I would consider tightening technical limits and, importantly, involving a mental health professional," Berman said.

Parents are used to worrying about who their kids spend time with and whether their friends might encourage them to make bad decisions, Prinstein said. Parents need to remember that many kids are hanging out with a new, powerful "friend" these days.

"It’s a friend that they can talk to 24/7 and that seems to be omniscient," he said. "That friend is the chatbot."

PolitiFact Researcher Caryn Baird and Staff Writer Loreben Tuquero contributed to this report.

Our Sources

Interview with Mitch Prinstein, the American Psychological Association’s chief of psychology, Dec. 16, 2025

Email interview with Grace Berman, psychotherapist and co-director of community education at The Ross Center, Dec. 15, 2025

Email interview with Naomi Aguiar, associate director of Oregon State University’s Ecampus Research Unit, Dec. 15, 2025

Email interview with Anne Maheux, University of North Carolina at Chapel Hill psychology professor, Dec. 15, 2025

Email interview with Dr. Akanksha Dadlani, child and adolescent psychiatry fellow at Stanford University, Dec. 16, 2025

Email interview with Şerife Tekin, a SUNY Upstate Medical University associate professor at the Center for Bioethics and Humanities, Dec. 16, 2025

Education Week, Rising Use of AI in Schools Comes With Big Downsides for Students, Oct. 8, 2025

The New York Times, How Much Screen Time Is Your Child Getting at School? We Asked 350 Teachers, Nov. 13, 2025

HealthyChildren.org, How AI Chatbots Affect Kids: Benefits, Risks & What Parents Need to Know, accessed Dec. 15, 2025

American Psychological Association, Many teens are turning to AI chatbots for friendship and emotional support, Oct. 1, 2025

Northeastern Global News, New Northeastern research raises concerns over AI’s handling of suicide-related questions, July 31, 2025

NBC News, OpenAI rolled back a ChatGPT update that made the bot excessively flattering, April 30, 2025

Common Sense Media, New Report Shows Students Are Embracing Artificial Intelligence Despite Lack of Parent Awareness and School Guidance, Sept. 18, 2024

Center for Democracy and Technology, Hand in Hand: Schools’ Embrace of AI Connected to Increased Risks to Students, Oct. 8, 2025

Center for Democracy and Technology, Full report: Hand in Hand: Schools’ Embrace of AI Connected to Increased Risks to Students, October 2025

Character.AI, How Character.AI Prioritizes Teen Safety, Dec. 12, 2024

OpenAI, Introducing parental controls, Sept. 29, 2025

Bark, Bark Phone, accessed Dec. 17, 2025

Bark, The Bark App, accessed Dec. 17, 2025

Aura, accessed Dec. 17, 2025

CNBC, Meta announces new AI parental controls following FTC inquiry, Oct. 17, 2025

The Associated Press, AI chatbot pushed teen to kill himself, lawsuit alleges, Oct. 25, 2024

NPR, Their teenage sons died by suicide. Now, they are sounding an alarm about AI chatbots, Sept. 19, 2025

Apple Support, Use parental controls to manage your child's iPhone or iPad, accessed Dec. 17, 2025

Common Sense Media, Talk, Trust, and Trade-Offs: How and Why Teens Use AI Companions, 2025

Hopelab, Teen and Young Adult Perspectives on Generative AI, accessed Dec. 15, 2025