Then-House Minority Leader Nancy Pelosi of Calif. speaks at the 2018 California Democrats State Convention Saturday, Feb. 24, 2018, in San Diego. (Associated Press)

A manipulated video showing House Speaker Nancy Pelosi slurring her speech spread rapidly across social media last week. It also raised questions about what social media platforms, and their users, should do when altered footage of a public official goes viral in the future.

President Trump’s personal attorney Rudy Giuliani amplified the clip when he posted it on Twitter and wrote: "What is wrong with Nancy Pelosi? Her speech pattern is bizarre." Giuliani later deleted his tweet.

News organizations, such as The Washington Post and PolitiFact, debunked the video, showing its audio had been slowed and that it was not an accurate representation of Pelosi’s speech.

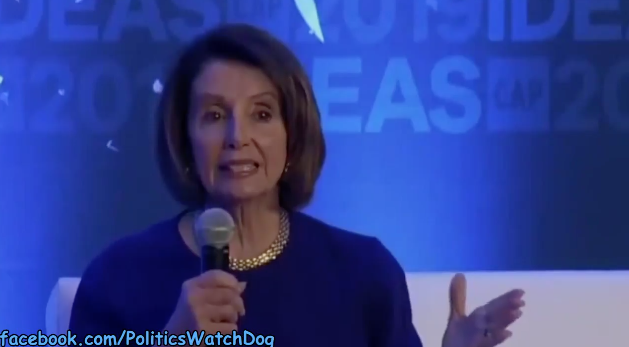

The three-minute clip was first posted on Facebook on May 22 by a website called Politics WatchDog. It was flagged as part of Facebook’s efforts to combat false news and misinformation on its News Feed. (Read more here about PolitiFact’s partnership with Facebook.)

A screenshot from a manipulated video interview of Nancy Pelosi addressing the Center for American Progress that appeared on Facebook on May 22, 2019.

But by the time the video had been shown to be a fake, millions on social media had already viewed it, with some questioning whether the California Democrat was drunk or medically impaired. It had received 2.8 million views as of Memorial Day.

Trump tweeted a separate video that also took a jab at Pelosi. Produced by Fox Business, it is a compilation of instances in which Pelosi repeated, rephrased or stumbled over words. The audio was selectively edited, but unlike the clip posted by Politics WatchDog, it was not slowed down.

The latest incidents raised many questions.

To seek answers, PolitiFact California spoke with Hany Farid, a UC Berkeley computer science professor and digital forensics expert who analyzed the videos. The interview has been lightly edited for clarity and length.

Q: How were the Pelosi videos altered?

A: There’s two videos floating around. The first one appears to be Speaker Pelosi slurring her words during an interview. That video is actually a pretty low-tech manipulation. They took the original video, which you can by the way find on C-SPAN and see what she actually sounded like in the original, and they slowed it down by about 75 percent, and I think they probably tweaked the audio a little bit to get it to sound more like her. With a really simple manipulation, (they) created a really compelling fake that makes it sound as if she’s slurring her words and that she’s drunk.

The other video that’s been making the rounds is even in some ways a lower-tech fake. All it is is a bunch of verbal slip-ups by Speaker Pelosi, just put back-to-back. It’s not meant to intentionally show that that’s the video. It’s clearly clip after clip after clip. And she was just stumbling on her words. And, frankly if you did that for anybody who talks as much as Speaker Pelosi does, you’d see exactly the same thing. So, that was a little bit more of a mean-spirited and unfair and distorted video.

Q: What larger concerns do these videos raise?

A: The problem is not fundamentally the fake videos, the fake images. The problem is the delivery mechanism, which is primarily these days social media. The fact is that anybody, just about anybody, now can create a fake video, really simple low-tech ones like you saw with Speaker Pelosi, and more sophisticated deep fake types of videos. They can distribute them to the tune of millions and millions of views. The person in the middle, the YouTubes and the Facebooks and the Twitters, are actually doing very little to actually deal with these misinformation campaigns. And therein lies, I think, in some ways the bigger issue here. It’s not just the creation of videos that is being shared among a few friends. It is that you can now impact the general public’s view of our politicians, our candidates, our president and our representatives. I think that if you’ve been paying attention over the past few years, you have seen that these misinformation campaigns, whether they are starting internally or they are from foreign interference, are having an effect on our elections and on our public discourse.

We have to think about these issues not just on the technological side, (such as) how do you detect these fakes? But the (social media) platforms have to think about their policies: What are they going to allow? And what are they not going to allow? And then, of course, the public has to get more discerning. The fact is these videos also would not get as much traction if people were not so quick to comment and share and like and retweet. But everybody is so quick to believe the worst in the people they disagree with that we’ve lost all sense of common sense and civility in our discourse. All of these are, in many ways, sort of the perfect storm brewing because if any of the things I just mentioned didn’t exist, we’d be relatively safer. But when we combine all of these things, the ability to create the technology, the ability to deliver it and the willing public to consume it, I think you have in many ways the perfect storm.

Q: What have social media platforms done?

A: As of Friday afternoon, YouTube has taken down at least some of the versions of the video. Facebook has not.

[Monika Bickert, Facebook’s head of global policy management, said in an interview on CNN late Friday afternoon that "Anybody who is seeing this video in news feed, anyone who is going to share it to somebody else, anybody who has shared it in the past, they are being alerted that this video is false."]

But in some ways, it’s just too late. By the time millions of people have seen these videos, taking it down after the fact is hardly helpful. If we learned anything from the New Zealand (mosque) attacks (which were streamed on Facebook Live) and the distribution of the videos that came after that, YouTube and Twitter and Facebook have struggled (and) continue to struggle to take down these videos. So, I don’t think these platforms are well-equipped to deal with these issues. I don’t think that they have the technology in place, they don’t have the human moderators in place, they don’t have the policies in place. And, frankly, they don’t have the will in place. But at the same time, we recognize that these social media companies are playing a huge role in our public discourse and our democratic elections. And herein lies some real tension, particularly in the lead-up to the 2020 elections.

Q: Is this a First Amendment issue? And isn’t it a slippery slope if YouTube takes down a manipulated video of one politician but not a distorted video of a rival politician? Also, can’t we make fun of our politicians? Where’s the line here as to when social media platforms should act?

A: It’s a fair question. Let’s get a few things straight, though. This is not a First Amendment issue. The First Amendment says Congress shall not impinge on your rights. It says nothing about YouTube or Facebook. These are private companies and they can take down content without infringing on your First Amendment rights. I will mention by the way that YouTube and Facebook do not allow adult pornography on their platforms, which is perfectly protected speech, of course. And nobody is jumping up and down on their head saying you’re infringing on my rights. That’s not to say there aren’t serious issues here. We do have to separate out what are the policies we are willing to accept from these social media platforms.

But let’s not couch it as a First Amendment right. You don’t have a fundamental right to say whatever you want on YouTube and Facebook. You have to obey the terms of service. Having said that, I think you’re right, if this was meant to be satire or funny, then I think most of us would agree that it’s fine. But that’s not how it’s been played. This is not satire, this is not The Onion, it’s not being used for comedic relief. It is being used to smear one of our politicians and I think that plays in a different way.

YouTube has 500 hours of video uploaded a minute. Facebook has billions of uploads a day. These platforms operate at a scale where they can’t govern themselves. So, you have two questions here. One is: Can we put sensible and thoughtful policies in place that find a balance between an open and free internet and hateful speech and dangerous speech? And can we then actually implement those policies? I think both of those are very, very difficult questions. I think the social media platforms have been way too slow to recognize the misuse of their platforms. I think now we are playing catch up and that is obviously much, much harder than if we had put these safeguards in place in the first place.

Q: Will we see more of these fake videos as we get further into the 2020 election cycle? And what can the average media consumer do about them?

A: If you look at the last few years, it doesn’t take a stretch of the imagination to say that it is likely that we’ll see more things like this in the coming year and a half. We have been seeing misinformation campaigns, whether that’s domestic or foreign interference over the last two years across the spectrum both here and abroad. And I don’t think I’m reaching here to say this will probably continue. So, what can we as the public do? There’s sort of two things I think we can do today. One, and perhaps this is the most important thing, is to slow down. Everybody is too quick to be outraged on social media. Everybody is too quick to retweet and share and comment without really thinking through the plausibility of what they’re hearing or seeing. I think that’s a fixable problem. We just have to become better digital citizens than we are, frankly.

I think the platforms have to get more serious about misinformation campaigns. And then I think the campaigns have to start thinking about how they authenticate the content of their candidate. There’s actually some technological solutions. They are called controlled capture imaging systems. This is an app you can download to your phone. You can record an image or a video. And at the time of recording, it cryptographically signs the content, puts it on the blockchain, which is an immutable ledger. That way you can verify the authenticity of that media in the future.
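The sign-at-capture idea Farid describes can be illustrated with a minimal Python sketch. This is an illustration under stated assumptions, not how any particular app works. The names `sign_capture`, `Ledger` and `verify_media` are hypothetical. A keyed hash (HMAC) stands in for a real digital signature, and a simple in-memory hash chain stands in for a blockchain.

```python
import hashlib
import hmac
import json


def sign_capture(media: bytes, device_key: bytes) -> dict:
    """Fingerprint the media at recording time and sign the fingerprint.

    Assumption: HMAC with a secret device key stands in for a signature;
    a real system would more likely use public-key signatures.
    """
    digest = hashlib.sha256(media).hexdigest()
    signature = hmac.new(device_key, digest.encode(), hashlib.sha256).hexdigest()
    return {"digest": digest, "signature": signature}


class Ledger:
    """Toy append-only ledger: each entry commits to the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries = []

    @staticmethod
    def _hash(record: dict, prev_hash: str) -> str:
        body = json.dumps({"record": record, "prev_hash": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode()).hexdigest()

    def append(self, record: dict) -> int:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        entry = {
            "record": record,
            "prev_hash": prev_hash,
            "entry_hash": self._hash(record, prev_hash),
        }
        self.entries.append(entry)
        return len(self.entries) - 1

    def is_intact(self) -> bool:
        """Recompute every link; any edited entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if self._hash(entry["record"], prev_hash) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True


def verify_media(media: bytes, ledger: Ledger, index: int, device_key: bytes) -> bool:
    """Check that the media matches what was signed and logged at capture time."""
    if not ledger.is_intact():
        return False
    record = ledger.entries[index]["record"]
    digest = hashlib.sha256(media).hexdigest()
    expected = hmac.new(device_key, digest.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(record["digest"], digest) and hmac.compare_digest(
        record["signature"], expected
    )


if __name__ == "__main__":
    key = b"hypothetical-device-key"
    original = b"original video bytes"
    ledger = Ledger()
    idx = ledger.append(sign_capture(original, key))
    print(verify_media(original, ledger, idx, key))
    print(verify_media(b"slowed-down video bytes", ledger, idx, key))
```

In this sketch, any change to the recorded bytes, such as slowing the audio, produces a different fingerprint, so the altered file no longer matches the entry logged at capture time.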

Our Sources

Hany Farid, computer science professor and digital forensics expert, UC Berkeley, phone interview, May 24, 2019

Facebook, Politics Watchdog post, May 22, 2019

Twitter, @realdonaldtrump tweet, May 23, 2019

PolitiFact, Viral video of Nancy Pelosi slowed down her speech, May 24, 2019

The Washington Post, Faked Pelosi videos, slowed to make her appear drunk, spread across social media, May 24, 2019

CNN.com, Cooper grills Facebook VP for keeping Pelosi video up, May 24, 2019

C-SPAN, Speaker Pelosi at CAP Ideas Conference, May 22, 2019