

Amid a presidential election, a global pandemic and nationwide unrest, more Americans are using social media in 2020 than ever before.

Facebook reported in July that its daily users had surged to 1.79 billion — a 12% increase from the year before. In the United States, more than 220 million people use the platform at least once a month.

Twitter has also reported strong growth this year, even as it eliminates fake accounts. TikTok’s user base has grown about 800% since 2018, and it now has more than 100 million monthly users in the U.S. alone.

A lot of that growth came as Americans were told to stay in their homes and practice social distancing to slow the spread of the coronavirus. But while social media may help people stay connected during the pandemic, it can also serve as a vehicle for misinformation.

Since January, PolitiFact has fact-checked hundreds of false and misleading claims about the coronavirus, the presidential race, natural disasters and nationwide protests against police brutality. Many of the claims were posted on Facebook, Instagram or other social media websites.


What are those companies doing about it? How do they decide which posts to fact-check or remove altogether — if they take any action at all?

To find out, we read the rulebooks published by Facebook, Instagram, Twitter, YouTube and TikTok. Here’s our guide to how those social media companies are tackling falsehoods in the final weeks of the election.

Have another question we didn’t answer here? Send it to truthometer@politifact.com.


Facebook and Instagram

The largest social media company in the world has the largest fact-checking program to address misinformation on its platform. Its policies are detailed, with a notable carveout for politicians. Here are a few key facts about how Facebook and Instagram, which the company owns, address misinformation.

Does it have a fact-checking partnership?

Yes.

Since 2016, Facebook has partnered with independent fact-checking organizations around the world (including PolitiFact) to fact-check false and misleading posts. Only fact-checkers that are signatories of the International Fact-Checking Network’s Code of Principles are eligible for inclusion in the program, which spans dozens of countries on six continents, with 10 partners in the U.S.

Here’s how it works:

- Fact-checking outlets that partner with Facebook are given access to a dashboard that surfaces posts that contain potentially false and misleading claims.
- When an outlet fact-checks a post, the content is flagged on Facebook and Instagram with an overlay and a link to the fact-checker’s story. Users can still see the content.
- If a fact-checker rates a post as False, Altered or Partly False, its reach is reduced in the News Feed, as well as on Instagram’s Explore and hashtag pages.
- Pages and domains that repeatedly share False or Altered content may see their distribution reduced and their ability to monetize revoked. Some may also lose their ability to register as a news page on Facebook.

Read more about PolitiFact’s partnership with Facebook here.

What does it do with false or misleading posts?

It depends on the subject matter of the post, but Facebook tends to flag misinformation on its platform rather than remove it altogether.

Facebook relies on its partnership with fact-checkers to downgrade false and misleading content on its platform, flagging it with links to related fact-checks. In August, Facebook also started labeling posts about voting, regardless of their accuracy. Those labels link users to a voter information hub with logistical details about how to cast a ballot.

Some misinformation gets removed altogether. Facebook is willing to remove content that:

- Includes "language that incites or facilitates serious violence";
- Constitutes fraud or deception;
- "Expresses support or praise" for terrorist, criminal or organized hate groups;
- Constitutes hate speech;
- Includes "manipulated media."

In practice, Facebook has applied those policies to ban pages and groups that support QAnon, a baseless conspiracy theory about child sex trafficking; to remove content that promotes bogus cures for COVID-19; and to take down false information about voting requirements or the situation at polling places.

For example, in May, Facebook removed a viral documentary called "Plandemic" that included a slew of false and unproven claims about the coronavirus. The company did the same thing with a sequel in August, which resulted in far fewer people seeing the video.

Despite Facebook’s preference for letting fact-checkers address false and misleading posts, the company sometimes preemptively takes action against content that may be inaccurate. That was the case Oct. 14, when Facebook, along with Twitter, reduced the reach of a New York Post story about Democratic presidential nominee Joe Biden and his son, Hunter.

What about posts from politicians and candidates?

It’s complicated.

Politicians are exempt from Facebook’s fact-checking policy. That means that, if a politician or political candidate posts false or misleading claims from their official accounts, those claims will not be flagged as misinformation.

"By limiting political speech we would leave people less informed about what their elected officials are saying and leave politicians less accountable for their words," the company says in its fact-checking rules.

But there are a few exceptions to Facebook’s policy.

If a politician shares a link, photo or video that a fact-checker has debunked, it may still be flagged since Facebook considers that to be "different from a politician’s own claim or statement." And Facebook has removed several of President Donald Trump’s posts for spreading misinformation about COVID-19, which the platform does not allow.

Does it allow political ads?

Yes — at least until Election Day.

Facebook announced it would temporarily ban political ads after polls close on Nov. 3. The company said the goal of the move is to "reduce opportunities for confusion or abuse," since states will probably take longer than usual to count mail-in ballots, delaying the results.

Facebook also said it will ban new political ads in the week before Election Day, as there may not be enough time to contest misleading claims contained in them.

Twitter

Until recently, Twitter had taken a hands-off approach to misinformation. The microblogging platform has since started to label posts that contain misleading or disputed claims about COVID-19 and the election. Here’s what you need to know.

Does it have a fact-checking partnership?

No.

What does it do with false or misleading posts?

Twitter has adopted a piecemeal approach to addressing misinformation. In many cases, inaccurate claims are allowed to stay on the platform.

The company uses a combination of cautionary flags and post removals to enforce its policies against false and misleading information. Twitter has targeted three main areas of misinformation for policing — elections, COVID-19 and "manipulated media."

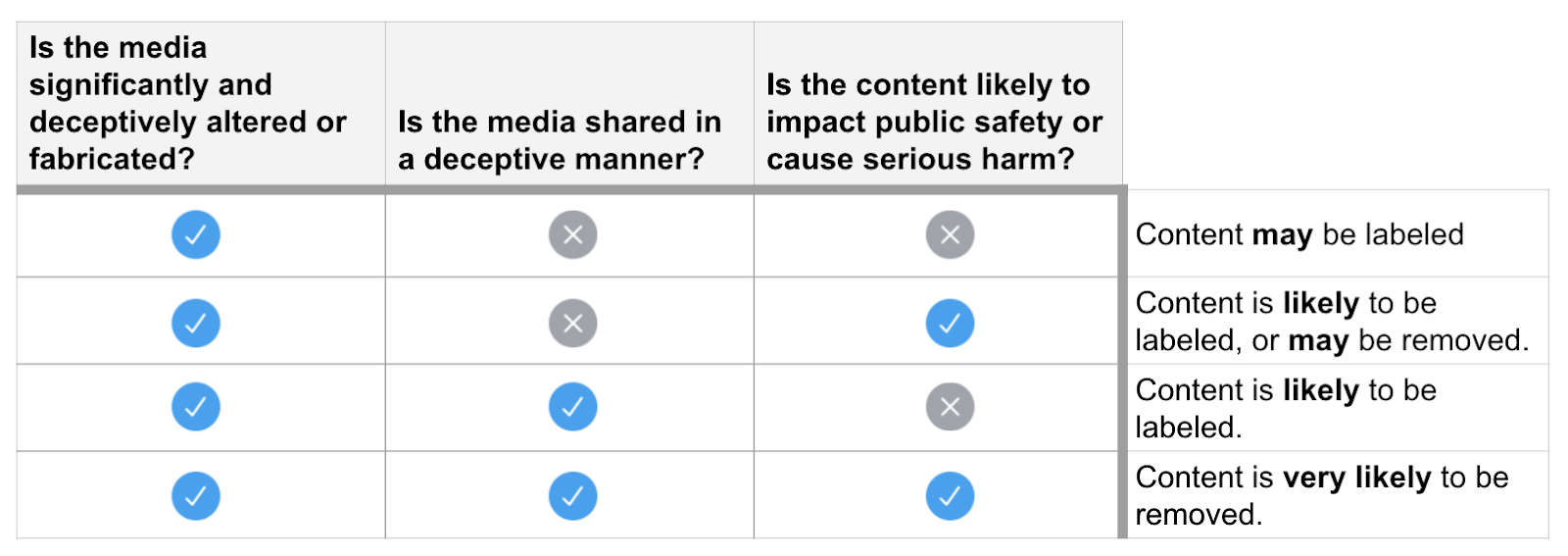

In February, the company introduced a policy that says users "may not deceptively share synthetic or manipulated media that are likely to cause harm." Twitter may label or remove photos or videos that are:

- Altered or fabricated;
- Shared out of context;
- Likely to cause "serious harm" or affect public safety.

(Graphic from Twitter)

In March, the company introduced a blanket ban on coronavirus-related content that increases the chance that someone contracts or transmits the virus. That includes tweets that deny expert guidance on COVID-19, promote bogus treatments or cures, or promote misleading content purporting to be from experts or authorities.

When it comes to elections, Twitter may "label and reduce the visibility of tweets containing false or misleading information about civic processes," according to the company’s civic integrity policy. That includes misinformation about elections, censuses and ballot initiatives.

The company also says it removes some posts that include misinformation on how to vote, as well as tweets that aim to undermine confidence in civic processes. However, Twitter allows "inaccurate statements about an elected or appointed official, candidate, or political party."

In May, Twitter updated its policy on misleading information to introduce new labels and warnings. Here’s how it works:

- If Twitter deems something to be "misleading information," the company will either append a label or remove the post altogether.
- If a tweet contains a "disputed claim," it may be labeled or placed behind a warning.
- If Twitter says something is an "unverified claim," nothing happens.

What about posts from politicians and candidates?

Posts from politicians are fair game for removal or labeling. In fact, politicians may be more likely to see their tweets labeled, since Twitter considers their posts to be "in the public interest."

This has happened to Trump’s tweets a few times.

In May, Twitter labeled two of Trump’s tweets for the first time. Beneath a post that claimed mail-in ballots would be "substantially fraudulent," the company included a link to a Twitter Moment with verified information about voting.

Since then, the company has labeled several more of the president's tweets. It has also deleted tweets that Trump shared from his account, and even locked his account until Trump deleted his own tweets that violated Twitter’s policy.

Does it allow political ads?

No. Twitter announced in October 2019 that it would ban them.


YouTube

When it comes to misinformation, YouTube has mostly taken cues from Google, its parent company. The video-sharing website tends to rely on adding context to videos about certain topics and suppressing videos that contain misinformation.

Does it have a fact-checking partnership?

Not really.

YouTube announced in spring 2019 that it had started surfacing articles from fact-checkers in search results that were "prone to misinformation." But those fact-checks aren’t applied to specific videos, and the company itself does not partner with independent fact-checking outlets.

What does it do with false or misleading posts?

It depends on the subject matter. In general, YouTube has a more hands-off approach than other platforms.

YouTube is similar to other platforms in that it does not allow content that incites people to violence, impersonates another person or organization, or promotes spam. The company removes content that violates its policies, such as:

- Misinformation about how to treat, prevent or slow the spread of COVID-19;
- False claims about potential coronavirus vaccines;
- Videos that include misleading or deceptive thumbnails;
- Manipulated photos or videos;
- False claims about how, where or when to vote or participate in the census;
- Inaccurate or misleading claims about a candidate’s eligibility for office.

For example, along with Facebook, YouTube removed the "Plandemic" documentary for violating its policies against coronavirus misinformation. In July, the company also took down a viral video of doctors making false claims about hydroxychloroquine and COVID-19.

YouTube says it uses "external human evaluators" to assess whether videos promote conspiracy theories or inaccurate information. That information feeds back into what videos YouTube recommends to its users.

However, YouTube differs from other social media companies in that it doesn’t label single pieces of misinformation. Instead, the platform labels videos from certain sources and those that deal with controversial topics.

For example, YouTube labels videos published by state-supported media outlets like Russia Today and PBS with links to their descriptions on Wikipedia (although enforcement has been spotty). The platform is also labeling videos about mail-in voting with a link to a report from the Bipartisan Policy Center.

YouTube was the last major social media company to ban content from QAnon supporters. On Oct. 15, the platform announced that it updated its hate and harassment policies to "prohibit content that targets an individual or group with conspiracy theories that have been used to justify real-world violence," including QAnon and Pizzagate.

What about posts from politicians and candidates?

While it doesn’t label individual false or misleading videos, YouTube has removed content published by politicians.

In December 2019, "60 Minutes" reported that YouTube had removed hundreds of Trump ads for violating its policies. (The company did not say which policies.) In August, YouTube removed a video from the Trump campaign for violating its rules against coronavirus misinformation. It showed the president in a "Fox & Friends" interview saying children are "almost immune" to COVID-19.

Does it allow political ads?

Yes. But like other platforms, YouTube has created restrictions on political ads around Election Day.


TikTok

TikTok, the video-sharing app popular among teens and young adults, is the most aggressive of these platforms when it comes to taking down misinformation.

Does it have a fact-checking partnership?

Yes.

Since summer 2020, TikTok has partnered with fact-checking outlets (including PolitiFact) to review potential political misinformation. Here’s how it works:

- TikTok surfaces potential election-related misinformation to fact-checking partners.
- Fact-checkers examine the videos and submit their findings.
- TikTok uses fact-checkers’ assessments to remove misinformation. Sometimes the company will reduce its reach instead. It does not label misinformation.

"Fact-checking helps confirm that we remove verified misinformation and reduce mistakes in the content moderation process," the company said in August.

Read more about PolitiFact’s partnership with TikTok here.

What does it do with false or misleading posts?

In most cases, TikTok removes misinformation.

According to the company’s community guidelines, TikTok does not allow several types of false or misleading information on its platform, including:

- Posts that incite hate or prejudice;
- Misinformation that could cause panic;
- Medical misinformation;
- Misleading posts about elections or other civic processes;
- Manipulated photos or videos.

Similar to other platforms, TikTok also does not permit users to post spam, impersonate other people or organizations, or promote hate speech or criminal activities. TikTok may reduce the discoverability of violating content — including misinformation — on the For You page or redirect hashtags and search results that surface it. The company has used those tactics to block content that promotes QAnon.

"When we become aware of content that violates our Community Guidelines, including disinformation or misinformation that causes harm to individuals, our community or the larger public, we remove it in order to foster a safe and authentic app environment," the company says in its election-related guidelines.

What about posts from politicians and candidates?

TikTok’s rules against misinformation apply to politicians.

"Our Community Guidelines apply to everyone who uses TikTok and all content on the platform, so no exceptions," said Jamie Favazza, a spokesperson for the company.

Does it allow political ads?

No. Aside from Twitter, TikTok is the only platform in this guide that doesn’t allow political ads.

Our Sources

Associated Press, "Facebook beefs up anti-misinfo efforts ahead of US election," Aug. 12, 2020

Axios, "Facebook will ban new political ads a week before Election Day," Sept. 3, 2020

Axios, "Scoop: Google to block election ads after Election Day," Sept. 25, 2020

BBC, "QAnon: TikTok blocks QAnon conspiracy theory hashtags," July 24, 2020

BBC, "Trump Covid post deleted by Facebook and hidden by Twitter," Oct. 13, 2020

Business Insider, "Twitter said it had to temporarily lock Trump's account after he shared a columnist's email address, violating the company's policies," Oct. 6, 2020

CNBC, "TikTok reveals detailed user numbers for the first time," Aug. 24, 2020

CNN, "Facebook removes Trump post falsely saying flu is more lethal than Covid," Oct. 6, 2020

CNN, "YouTube CEO won't say if company will ban QAnon," Oct. 12, 2020

Email from Jamie Favazza, TikTok spokesperson, Oct. 13, 2020

Facebook, Community Standards, accessed Oct. 12, 2020

Facebook, Fact-Checking on Facebook, accessed Oct. 12, 2020

Facebook, "Preparing for Election Day," Oct. 7, 2020

Facebook, Program Policies, accessed Oct. 12, 2020

Facebook, Voting Information Center, accessed Oct. 12, 2020

Facebook Journalism Project, Where We Have Fact-Checking, accessed Oct. 15, 2020

Fox Business, "Politicians Twitter has fact-checked," June 17, 2020

Google, COVID-19 Medical Misinformation Policy, accessed Oct. 13, 2020

Google, See fact checks in YouTube search results, accessed Oct. 13, 2020

Google, Spam, deceptive practices & scams policies, accessed Oct. 13, 2020

The Hill, "TikTok rolls out new political misinformation policies," Aug. 5, 2020

The Hill, "Twitter labels Trump tweet on coronavirus immunity as ‘misleading,’" Oct. 11, 2020

Los Angeles Times, "Twitter reports surprisingly strong user growth and revenue," July 26, 2019

NBC News, "Facebook bans QAnon across its platforms," Oct. 6, 2020

The New York Times, "Twitter Will Ban All Political Ads, C.E.O. Jack Dorsey Says," Oct. 30, 2019

NPR, "Facebook And Twitter Limit Sharing 'New York Post' Story About Joe Biden," Oct. 14, 2020

NPR, "Twitter, Facebook Remove Trump Post Over False Claim About Children And COVID-19," Aug. 5, 2020

PolitiFact, "Fact-checking a video of doctors talking about coronavirus, hydroxychloroquine," July 28, 2020

PolitiFact, "Fact-checking ‘Plandemic’: A documentary full of false conspiracy theories about the coronavirus," May 8, 2020

PolitiFact, "Fact-checking ‘Plandemic 2’: Another video full of conspiracy theories about COVID-19," Aug. 18, 2020

PolitiFact, "Fact-checking Trump, Twitter enters uncharted waters," May 28, 2020

PolitiFact, "Prepare for the weirdest election 'night' ever," Sept. 1, 2020

Poynter, "It’s been a year since Facebook partnered with fact-checkers. How’s it going?" Dec. 15, 2017

Poynter, "YouTube is now surfacing fact checks in search. Here’s how it works," March 8, 2019

ProPublica, "YouTube Promised to Label State-Sponsored Videos But Doesn’t Always Do So," Nov. 22, 2019

Reuters, "Exclusive: Facebook to ban misinformation on voting in upcoming U.S. elections," Oct. 15, 2018

Reuters, "Facebook, Twitter, YouTube pull Trump posts over coronavirus misinformation," Aug. 5, 2020

Reuters, "TikTok faces another test: its first U.S. presidential election," Sept. 17, 2020

Reuters, "YouTube bans coronavirus vaccine misinformation," Oct. 14, 2020

Statista, Number of Facebook users in the United States from 2017 to 2025, accessed Oct. 15, 2020

TikTok, "Combating misinformation and election interference on TikTok," Aug. 5, 2020

TikTok, Community Guidelines, accessed Oct. 12, 2020

TikTok, Integrity for the US elections, accessed Oct. 13, 2020

TikTok, "Understanding our policies around paid ads," Oct. 3, 2019

Tweet from Andy Stone, Facebook spokesperson, Oct. 14, 2020

Tweet from Twitter Safety, March 16, 2020

Twitter, "Building rules in public: Our approach to synthetic & manipulated media," Feb. 4, 2020

Twitter, Civic integrity policy, accessed Oct. 12, 2020

Twitter, "Defining public interest on Twitter," June 27, 2019

Twitter, The Twitter Rules, accessed Oct. 12, 2020

Twitter, "Updating our approach to misleading information," May 11, 2020

The Verge, "Facebook usage and revenue continue to grow as the pandemic rages on," July 30, 2020

The Verge, "YouTube adds links to fight mail-in voting misinformation ahead of Election Day," Sept. 24, 2020

Wall Street Journal, "Facebook to Ban Posts About Fake Coronavirus Cures," Jan. 31, 2020

The Washington Post, "Twitter deletes claim minimizing coronavirus death toll, which Trump retweeted," Aug. 31, 2020

The Washington Post, "Twitter slaps another warning label on Trump tweet about force," June 23, 2020

YouTube, "Authoritative voting information on YouTube," Sept. 24, 2020

YouTube, Community Guidelines, accessed Oct. 12, 2020

YouTube, How does YouTube combat misinformation? accessed Oct. 13, 2020

YouTube, "How YouTube supports elections," Feb. 3, 2020

YouTube, "Managing harmful conspiracy theories on YouTube," Oct. 15, 2020

"60 Minutes," "300+ Trump ads taken down by Google, YouTube," Dec. 1, 2019